Big 2017 Social Media Marketing Trends You Need to Know by @DannyNMIGoodwin

Here’s what 26 of the top marketing experts say will be the biggest trends you need to know in social media in 2017 – and beyond.

The post Big 2017 Social Media Marketing Trends You Need to Know by @DannyNMIGoodwin appeared first on Search Engine Journal.


How to Turn Social Media Data Into Engaging Local Content by @alexpanagiotopo

A step-by-step story of how a local agency was able to generate geographically-targeted, shareable, engaging content using public Instagram geodata.

The post How to Turn Social Media Data Into Engaging Local Content by @alexpanagiotopo appeared first on Search Engine Journal.


What you should look for in a SEO solution

With hundreds, thousands, tens of thousands, and even millions of pages, sites, and keywords to manage and optimize, SEO has become increasingly complicated and time-consuming. Using an SEO software platform can increase efficiency and productivity while reducing the time and errors involved in managing organic search campaigns. Marketing Land’s “Enterprise SEO Platforms: A Marketer’s Guide” […]

The post What you should look for in a SEO solution appeared first on Search Engine Land.

Google’s results are no longer in denial over “Did the Holocaust happen?”

Criticized for listing a Holocaust-denial site first, Google’s results are finally changing — but probably due to external factors.

The post Google’s results are no longer in denial over “Did the Holocaust happen?” appeared first on Search Engine Land.

What exactly is a marketing cloud, and do you need one?

The term ‘marketing cloud’ has gained significant traction in the last few years as major software companies have sought to monetise the growing importance of technology for marketing teams.

But what exactly does this sometimes fuzzy term mean, and are companies actually benefiting from the type of all-singing and all-dancing solutions increasingly being developed by the likes of Adobe, IBM, Oracle and Salesforce?

ClickZ Intelligence has published a new piece of research entitled ‘What the Hell is a Marketing Cloud, Anyway?’ which, as the name suggests, seeks answers to these questions.

The report is based on interviews with senior client-side executives across a range of brands and a survey of almost 200 marketing professionals about the extent to which their businesses are capitalising on this technology.

For the purposes of the survey, carried out in September 2016, we defined a marketing cloud as ‘an integrated suite of marketing tools which are available to marketers as web-based services’.

Read on for a summary of the key takeaways from the report.

The marketing cloud is having a positive impact

There is good news for vendors and their clients in that the majority of responding organisations (52%) claimed to be using marketing cloud technology, and a further 27% of companies said they were considering this.

Furthermore, the research found that responding organisations are generally very positive about the marketing cloud, with 82% of respondents saying that marketing cloud technology has had a positive impact on their organisation’s marketing.

The overwhelming majority of respondents (84%) also agreed that an integrated suite of marketing tools has a positive impact on customer experience compared to only 5% who disagreed. Similarly, 88% agreed that an integrated suite of marketing tools has a positive effect on business performance compared to only 3% who disagreed.

As the chart below shows, the reasons most commonly cited for using marketing cloud software are ‘reduced complexity’ (44%), a ‘single data source’ (40%), ‘better customer experience’ (38%), a ‘unified user interface’ (36%), ‘better visibility of return on investment’ (32%) and ‘improved ROI’ (30%).

Methodology note: respondents could check up to three options

Analytics are the bedrock of an effective marketing technology suite

Within the marketing cloud, a number of capabilities were considered by respondents to be critical, including CRM (59%), marketing automation (49%) and personalisation/targeting tools (46%).

However, the largest percentage (68%) felt that customer and digital analytics were the most critical core product within a unified suite of marketing tools, underscoring the importance of consistent and insightful data for underpinning the best marketing cloud offerings in the market.

Is there a downside to a marketing cloud?

The research also sought to understand whether there were disadvantages of using a single technology supplier.

While aiming to solve many of the current challenges marketers face, marketing clouds aren’t always the answer to everything. Few would expect a single, largely generic (though modifiable to varying degrees) technology solution to solve every single individual problem specific to each brand. As a result, executives felt its biggest failing was not to be ‘best of breed’ in all areas (36%).

You can download the report now to read in more detail about the essence of the marketing cloud, and also which vendors are most commonly being deployed.

The research also found that many marketers believe that vendors were not as transparent as they could be, despite educational efforts by vendors purporting to shed some light on the chaotic world of marketing technology. Over a third (35%) ‘somewhat’ disagreed that vendors were open about the pros and cons of the marketing cloud, and a further 5% ‘strongly’ disagreed.

Despite some suspicion of the way software companies are marketing their cloud offerings, giant strides have undoubtedly been taken by vendors to create more joined-up platforms following a string of acquisitions – some of which have taken longer than others to be truly integrated.

Senior executives interviewed for this research were keen to stress that marketing technology is not a panacea, although it can certainly make businesses more efficient.

Pete Markey, Brand Communications & Marketing Director at Aviva said he welcomed the ongoing use of automation in marketing, but warned against marketers hitting autopilot as the technologists take over.

“We’re all thinking about future-proofing. It’s not bad having a single provider as long as you have a sense of how technology is going to develop into the future. You don’t want to outsource your intellectual property. You don’t want technology to do the thinking for you to the point where you’re not keeping up with how you do what you do. Technology is a tool. It’s down to the marketer to protect the strength of their organisation.”


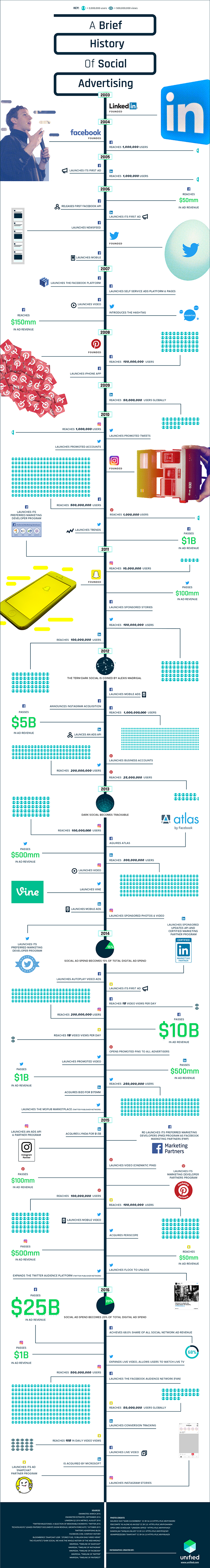

A Brief History of Social Advertising [Infographic]

Social advertising is a big business.

Over the past two years, social media advertising budgets have doubled worldwide, from $16 billion U.S. in 2014 to $31 billion in 2016.

While Facebook currently claims nearly 70% of the social advertising market, it’s starting to get some serious competition from younger networks. Twitter, Instagram, LinkedIn, Pinterest, and Snapchat are all vying for their piece of the social ads pie, and advertisers today have more options than ever before to promote their brands on social.

From mobile to live video and everything in between, we’ve documented some of the most significant milestones for both these top networks as well as social advertising in general.

Check out the below infographic on the history of social advertising and consider: What opportunities and challenges will brands face in the next 10 years?


How to Create a Growth Hacking Framework

Have you heard about the Fake It Hack? How about the Exclusivity Hack? The Aha Moment Hack?

These are a few of the tactics you’ll read if you Google “growth hacking.” Don’t worry if you haven’t heard of them before. You don’t need to know about them yet.

The only thing you need to know right now is how to create a scalable and repeatable process so you can efficiently execute, test and learn from these growth hacks. At least that’s what HubSpot’s former VP of Growth Brian Balfour would say.

According to Balfour’s Slideshare, when it comes to growth hacking, focus on process first, tactics second.

This makes sense because what works for one company will not automagically work for you. Your business model is probably different. Your audience may be different. Your customer journey – you guessed it – is different.

Because Googling for growth hacks is so much more fun than actually carving out yet another process, there’s a million and one mega lists of tactics and virtually no articles on how to create a repeatable system for testing your ideas. In this post, you’ll learn the latter.

First, I’ll discuss why you need a growth process and detail the key documents you’ll need to create. And finally, I’ll outline a step-by-step system for testing your growth hacking ideas.

Shall we?

Why Create a Process?

People + Process = Growth Hacking

Assuming you have good people, you need to give them autonomy to decide what to work on and goals to hold them accountable to.

By establishing a process, you establish a rhythm, allowing your team to efficiently execute experiments and then learn from those experiments. As you run more experiments, you’ll have more learnings to document in your playbook.

Why compile your learnings?

Because what if a key team member leaves, and they weren’t documenting their experiments? Then they leave with key intel, and all those experiments were useless.

The 7-step Process

So you know you want to grow, but you need to be more specific than that.

1. Define Objectives and Key Results (OKRs)

OKRs are a method of defining and tracking objectives and their outcomes. Their main goal is to connect company, team and personal objectives to measurable results, so that people move together in the right direction.

Objectives

“It’s like goals served in a fractal manner.” — Eric, founder of Pebble

What do you hope to accomplish?

According to Google, objectives, sometimes referred to as “stretch goals,” should be fairly uncomfortable and quite ambitious. Each objective should be specific enough that you’ll easily know whether it has been achieved. Here’s a few tips for setting objectives.

Pick three to five objectives.

Push for new achievements.

Make it specific enough to be able to see the end in sight, meaning it should be obvious if a result was achieved or not.

Key results

Key results are the specific numbers, based on objectives, you aim to meet in a specific period of time. Here’s a few tips for picking key results.

Define three key results for every objective.

Key results are measurable milestones that directly move you closer to the objective.

Describe outcomes, not activities.

There must be proof you reached your key result. Evidence should be easily accessible.

OKR example

Here’s an OKR example of Uber.

2. Brainstorm ideas

Next, you need to brainstorm all of the possible avenues to reaching your objectives. If Uber wants to increase its driver base in each region by 20 percent then it needs to brainstorm ways to achieve this key result.

Uber could generate ideas by researching how its competitors are securing more drivers.

Once ideas are generated, its team could try to poke holes in them by asking questions like, why, what if… and what about….

Its team could even look outside its industry at how other marketplaces are increasing their supply side, because the more dots you have, the more ideas you have.

Last but not least, make sure you’re talking with other growth teams to see what’s working for them that might work for you.

To do:

Record a backlog of your ideas.

You don’t want to lose any of your ideas so make sure to record them in a safe place. I prefer to use Trello myself, but a spreadsheet works too.

3. Prioritize

Now, it’s time to decide which ideas you’ll focus on and test. Here’s a few elements to consider during the prioritization process.

What’s the probability that it will work? Is it a low chance (20 percent), medium (50 percent) or high (80 percent)?

What’s the potential impact? This comes from your hypothesis, and you should take into account long-lasting effects versus one-hit wonders.

How many (and which) resources will this require? You must consider the teams that will be needed (marketing, design, engineering) and the amount of time that will be required (one hour, half a day, one day, one week).
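One way to make these three questions comparable across ideas is to fold them into a single score. The sketch below is only an illustration, not a formula from Balfour's process: the `Idea` class, the `priority_score` rule (expected impact per hour of effort), the 1-to-10 impact scale and the sample backlog are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    """One backlog entry (hypothetical structure for illustration)."""
    name: str
    probability: float   # chance it works: 0.2 (low), 0.5 (medium), 0.8 (high)
    impact: float        # assumed 1-10 estimate of the potential impact
    effort_hours: float  # total time across marketing, design, engineering


def priority_score(idea: Idea) -> float:
    """Expected impact per hour of effort (an illustrative scoring rule)."""
    return idea.probability * idea.impact / idea.effort_hours


def prioritize(ideas: list[Idea]) -> list[Idea]:
    """Return the ideas sorted from highest to lowest score."""
    return sorted(ideas, key=priority_score, reverse=True)


# Made-up ideas for the Uber driver-growth example.
backlog = [
    Idea("Referral bonus for drivers", probability=0.5, impact=8, effort_hours=40),
    Idea("Rewrite sign-up page headline", probability=0.8, impact=3, effort_hours=4),
    Idea("Partner with car rental firms", probability=0.2, impact=9, effort_hours=80),
]

for idea in prioritize(backlog):
    print(f"{idea.name}: {priority_score(idea):.3f}")
```

A score like this is only a conversation starter. It favors cheap, likely wins, so you may still want to hand-pick one ambitious, long-lasting bet.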

4. Test

This is where your experiment doc comes in handy.

First and foremost, what’s your hypothesis? Here’s what a hypothesis reads like.

If successful, [variable] will increase by [impact], because [assumptions].

Make sure to justify your assumptions with quantitative (previous experiments, surrounding data, funnel data), qualitative (surveys, support emails, user testing, recordings) and secondary information (networking, blogs, competitor observations, case studies).

To do:

Create an experiment doc.

Google Docs works nicely for this because it allows you to easily collaborate with your team. Your experiment doc should have the following information:

Objective: Qualitative statement

Timeframe: Between 30 and 90 days

KR1: Measurable goal one (hit 90 percent of the time)

KR2: Measurable goal two (hit 50 percent of the time)

KR3: Measurable goal three (hit 10 percent of the time)

Here’s a link to an experiment doc in Google Docs I created for you. Feel free to make a copy and use it.
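If you would rather keep experiment docs in code or export them to a spreadsheet, the same fields can be captured in a small data structure. This is a minimal sketch under assumptions: the `ExperimentDoc` class, its field names and the sample values are hypothetical. Only the hypothesis wording and the 30-to-90-day timeframe come from the steps above.

```python
from dataclasses import dataclass, field


@dataclass
class ExperimentDoc:
    """Hypothetical container mirroring the experiment doc fields."""
    objective: str            # qualitative statement
    timeframe_days: int       # between 30 and 90 days
    variable: str             # what you expect to move
    impact: str               # by how much
    assumptions: str          # why you expect it
    key_results: list[str] = field(default_factory=list)
    findings: str = ""        # filled in during the Analyze step

    def __post_init__(self) -> None:
        if not 30 <= self.timeframe_days <= 90:
            raise ValueError("timeframe should be between 30 and 90 days")

    def hypothesis(self) -> str:
        """Render the hypothesis in the template form used above."""
        return (f"If successful, {self.variable} will increase by "
                f"{self.impact}, because {self.assumptions}.")


# Made-up example values.
doc = ExperimentDoc(
    objective="Grow the driver base in each region",
    timeframe_days=60,
    variable="weekly driver sign-ups",
    impact="20 percent",
    assumptions="drivers trust referrals from other drivers",
    key_results=["KR1", "KR2", "KR3"],
)
print(doc.hypothesis())
```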

5. Implement

Run your experiments for between 30 and 90 days, and measure results weekly.

6. Analyze

What did you learn? Here’s a few questions to help you analyze your experiment.

What was the impact of this experiment?

How accurate were your predictions?

Why did you see the results you saw?

Once you answer these questions, document your findings at the end of your experiment doc so you can go back and review in the future.


7. Systemize

If your experiment was successful, document your step-by-step process into a “playbook” so you can turn this into a streamlined, repeatable process.

Uber created a playbook for sabotaging Lyft, for example, and it also has a playbook for launching in new cities, since this is something the popular app does all the time.

What Tools Can Help?

I’m so glad you asked. There’s a lot of tools you can use to simplify this process.

GrowthHackers Projects

For $9 per month, you can use Sean Ellis’ GrowthHackers Projects, which is a full-fledged growth hacker system in the form of a web app.

A nice benefit of GrowthHackers Projects is that it’s connected to the GrowthHackers network, which means you can save ideas from the community with the click of a button.

Trello + Google Docs

There’s a lot of Trello growth hacking templates you can copy, such as this one and this one.

Airtable

Airtable is a new, good-looking spreadsheet software. It’s pretty neat. Here’s a growth hacking template for it.

Asana

If you already use Asana, you could just create another project, and use it for your growth hacks. Templana even offers an Asana template with 40 growth hacking tactics to get you started. Also, a few of these free templates by Asana might help you as well.

Growth Hacking is About the Process, Not the Tactics

Woohoo! You’ve carved out a process for growth hacking, which means you’re way ahead of your competitors, since most marketers don’t even have a documented content strategy in place.

Now, you can go wild researching growth hacking tactics. You’re welcome.

About the Author: Lauren Holliday is the founder of Freelanship, which offers 31 pre-packaged marketing gigs, and she’s the creator of the Full Stack Marketer, a full-stack marketing course that helps people learn by doing freelance projects. She’s also the managing editor at Toptal, an exclusive network of the top freelance software developers and designers in the world.

Mantova, Italy's Capital of Culture 2016, on Google Arts & Culture

The City of Mantova, the Italian Capital of Culture 2016, unveils its story along with its finest cultural treasures and natural beauty on Google Arts & Culture.

With your smartphone or computer, start exploring the wonders of the Palazzo Te: admire the lively details of the frescos of the Chamber of the Giants and use Google Cardboard to step into the room and visit all the other iconic places of Mantova, immersing yourself in its heritage in 360°.

For the first time in Italy, the use of the Art Camera made it possible to bring online in ultra high resolution 50 paintings from the Palazzo’s collection including the enigmatic Portrait of Giulio Romano by Titian.

Detail of the Portrait of Giulio Romano

You will be amazed by the majestic ceiling of the Teatro Bibiena, haunted by the spirit of Mozart, who played the opening concert on the 16th of January 1770. Leaf through the books of the Biblioteca Teresiana to find the verses of the Songbook for Isabella d’Este, a great Renaissance woman, or the illuminated pages of the invaluable manuscript from the library of the monastery of Saint Benedict in Polirone. Then, take a walk inside the Palazzo del Podestà, currently undergoing restoration, a work in progress that allows us to track the successive transformations and functions of the buildings.

The majestic ceiling of the Teatro Bibiena

Yet the treasures of Mantova are not limited to the inside of its palaces. The City is itself an open-air museum inviting the user for a walk to discover its magnificent sights, its story, tradition and tastes.

With the end of the year approaching, Mantova will soon pass on its title of Italian Capital of Culture to another Italian city, but its timeless artworks and wonders will remain accessible to anyone online on the Google Arts & Culture platform. Visit it at g.co/mantova2016

Reflecting on Google's GNI Engagement

As the year comes to a close, we’re reflecting on Google’s Global Network Initiative (GNI) assessment and some of this year’s important developments in our work to protect the free expression and privacy interests of our users.

Last week, in our continued effort to increase transparency around government demands for user data, we made available to the public the National Security Letters (NSLs) Google has received where, either through litigation or legislation, we have been freed of nondisclosure obligations. Our goal in doing so is to shed more light on the nature and scope of these requests. We’ve also supported policy efforts to ensure that the privacy interests of non-U.S. persons are addressed as U.S. policymakers consider government surveillance issues.

Earlier this month, we highlighted our efforts to comply with the right to be forgotten in Europe. For much of the last year, we’ve worked to defend the idea that each country should be able to balance freedom of expression and privacy in the way that country sees fit, and not according to another country’s interpretation. One Data Protection Authority, the French Commission Nationale de l’Informatique et des Libertés (the CNIL), ordered Google to delist French right to be forgotten removals for users everywhere. We agree with the CNIL that privacy is a fundamental right — but so, too, is the right to free expression. Any balance struck between those two rights must be accompanied by territorial limits, consistent with the basic principles of international law.

These are some examples of Google’s public policy work that illustrate our commitment to the freedom of expression and privacy rights of our users. We know that pressing global issues are best addressed in partnership with key stakeholders, and the GNI is critical to Google’s efforts.

The GNI is at the core of our multi-stakeholder engagement on free expression and privacy issues. Google is proud to be a founding member of the GNI, an initiative that brings together ICT companies with civil society organizations, investors, and academics to define a shared approach to freedom of expression and privacy online. The GNI provides a framework for company operations, rooted in international standards; promotes accountability of ICT sector companies through independent assessment; enables multi-stakeholder policy engagement; and creates shared learning opportunities across stakeholder boundaries.

Earlier this year, GNI released the second round of assessments, and announced the board’s determination that Google is compliant with the GNI framework. The assessment is an important tool for companies, NGOs, academics, and others working together to review how companies address risks to privacy and free expression.

The assessment process includes a review of relevant internal systems, policies and procedures for implementing the GNI Principles (“the process review”), and an examination of specific cases or examples that show how the company is implementing them in practice (the “case review”).

Our cases were selected for their salience to our approach to implementing the GNI Principles, taking into consideration Google’s products and services, geographical footprint, operating environments, and human rights risk profile. In addition to the Google-specific cases discussed in GNI’s public assessment report, we wanted to provide additional examples to illustrate the types of non-U.S. cases reviewed.

Request for user data

A request was made for Gmail user information by a federal police department. A key part of our process is making sure that the requests we receive are appropriately supported by legal process. In this case, we found that the initial request was inadequate due to failure to have a judicial stamp or signature, and we therefore pushed back, as we would not comply unless the request was judicially authorized. Once these items were obtained and we determined that it was a valid legal request (including that it was not overbroad), we complied with the request.

Request for removal

A request for Blogger content removal was made by a regulatory agency. The requestor claimed that content was subject to removal under the country’s statute prohibiting appeals to mass riots, extremist activities, and mass actions against established order. In reviewing the request, we determined that the content did not violate our terms of service. We then responded by requesting a copy of the decision citing specific URLs that are illegal. This would be evidence of an authoritative interpretation of the local law as applied to the content. As there was no response from the requestor, and the content did not violate our company policies, the request was denied and we did not remove the material.

RTBF: Push for Judicial Review; Careful Development and Implementation of Rigorous Removal Process for Requests

This example describes how we responded to requests subsequent to the Google Spain v AEPD and Mario Costeja ruling, which presented risks to freedom of expression. In the Costeja case, we appealed through the court process, but were unsuccessful. We pushed back on this ruling because we considered the requirement for Google to take down this information to be in conflict with freedom of expression. On appeal, the Court of Justice of the European Union found that people have the right to ask for information to be removed from search results that include their names if it is “inadequate, irrelevant or no longer relevant, or excessive.” In deciding what to remove, search engines must also have regard to the public interest, without additional guidance regarding what information constitutes “public interest.” The court also decided that search engines don’t qualify for a “journalistic exception.” We continue to fight court cases seeking to expand this requirement for takedowns globally.

We also convened the Advisory Council to Google on the Right to be Forgotten to review input from dozens of experts in meetings across Europe, as well as from thousands of submissions via the Web. The Council included Frank La Rue, the UN Special Rapporteur on the Promotion and Protection of the Right to Freedom of Opinion and Expression. The Council advised us on performing the balancing act between an individual’s right to privacy and the public’s interest in access to information.

In response to the Costeja ruling, Google established a dedicated team to develop and implement a system to remove valid RtbF requests. We evaluate each request appropriately, complying with the law, but making sure that, if there is a legal basis for the content to remain available, we will assess how that applies. To address the ruling, we assembled a team to address the new category of requests arising from the rights articulated in Costeja. Our web removals site was updated to include information about and a portal for RtbF requests. Requests are reviewed by the legal removals team; after review, the requester is notified of the determination. Since implementing this system, we have delisted approximately 780,000 URLs. Our process responds to individual requests and carefully evaluates each request against the criteria for removal. We also notify websites when one of their pages has been removed pursuant to a RtbF claim. In addition to removing URLs, we include information about RtbF requests and removals in our Transparency Report.

Our assessors also provided us with recommendations for enhancing our implementation of the GNI Principles. These recommendations, combined with feedback and ongoing engagement with GNI stakeholders, will inform our policies and practices and strengthen our advocacy in 2017.

Why Split Testing is Killing Your Conversion Rate

If your conversion rate is flat-lining and you’ve tried virtually everything to stop its downward spiral – split testing could be to blame.

Yes, split testing – that incredible flip-switching, conversion-boosting, audience-wooing strategy that boasts major lifts – but seldom delivers on its promises – could be the very issue that’s causing even the best-planned campaign to fall on its face.

Now, before you grab your digital torches and pitchforks, I want to clarify right from the start that the idea of split testing itself – discovering your customers’ preferences, pushing their psychological hot-buttons and giving them the best possible experience from start to finish – is a solid and sound strategy. One that every business should wholeheartedly embrace.

But unfortunately, many split testing services only give you one piece of the puzzle. Let’s dig a little deeper to see what’s causing the problem and how you can fix it – starting today.

The Big Split Testing Myth

The premise is simple: create two (or more) versions of a page. Divide traffic equally between them and gauge the results. But here’s where things get real and the flaws in the system are exposed.

You’re supposed to automagically know what to do with those results, when in fact the information you’re collecting comes in the form of a piecemeal package that tries to convince you that one option is better than another.

As an example, SumAll ran an Optimizely test with two of the exact same versions of their pages. Optimizely predicted the first version was beating the second by nearly 20%.

(Thankfully, Optimizely has since reworked their statistics system to prevent gaping flaws like this one from muddying the results).

But this illustrates a major issue in split testing – which is that you can predict large, grandiose upticks in performance all you want – but if those don’t translate into actionable steps that have a direct effect on revenues, they might as well be useless.

The Sneaky, Statistical Secret “They” Don’t Want You to Know About…

At the risk of sounding too much like the conversion optimization version of Kevin Trudeau, most split testing and optimization companies aren’t out to vacuum your bank accounts and leave you with nothing to show for it. It’s not a giant, covert operative designed to feed you “feel good numbers”.

But there is a wrench in the works when it comes to the kind of split testing done by these various platforms. Some, like Visual Website Optimizer and Optimizely, do what are called “one-tailed” tests, while others, like Adobe Test&Target and Monetate, do “two-tailed” tests.

So what’s the difference between them, and why should you care?

SumAll brought this to light in their above piece, and the implications can have a considerable effect on how you split test. To better illustrate the idea—

A one-tailed test only looks for an effect in one direction, usually an improvement. It’s like always winning at the blackjack table. Executives who don’t understand (or don’t care about) the finer points of split testing love one-tailed tests, because they seem to always throw out big wins. It sounds great for the site and the customers, not to mention job security – but there’s a big issue, in the form of all of the other statistically-significant things that didn’t make it into the analysis.

And that’s where a two-tailed test comes in.

A two-tailed test checks for a difference in either direction, so it can flag a variant that performs worse as well as one that performs better. You need more data this way to reach a conclusion, but the conclusion covers outcomes that a one-tailed test doesn’t look for.

But that doesn’t always mean that a two-tailed test is the right answer. There will be times when you want evidence to correlate a positive change with a specific test. And one-tailed tests require far fewer resources to conduct. It’s just important to know what you’re measuring and how much of it is really having a direct impact on your bottom line and your conversion rate – and how much of it is just made to impress.
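To see how much the choice of tails matters, here is a minimal sketch that computes both p-values for the same result using a standard two-proportion z-test. It does not reproduce any particular platform's statistics engine. The function names and the visitor and conversion counts are made up for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z(conv_a, n_a, conv_b, n_b):
    """z statistic for the conversion-rate difference (B minus A), pooled SE."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_b - p_a) / se


def p_values(z):
    """Return (one-tailed, two-tailed) p-values for a z statistic."""
    norm = NormalDist()
    one_tailed = 1 - norm.cdf(z)             # asks only: is B better than A?
    two_tailed = 2 * (1 - norm.cdf(abs(z)))  # asks: is B different from A?
    return one_tailed, two_tailed


# Hypothetical test: 100 of 1,000 visitors convert on A, 125 of 1,000 on B.
z = two_proportion_z(100, 1000, 125, 1000)
one, two = p_values(z)
print(f"z = {z:.2f}, one-tailed p = {one:.3f}, two-tailed p = {two:.3f}")
```

With these made-up counts, the one-tailed p-value falls below the usual 0.05 threshold while the two-tailed one does not, so the same data produces a "winner" on one kind of platform and an inconclusive test on the other.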

The Mountain of Statistical Significance

I’ve written in the past about statistical significance, particularly why it matters and how it affects your tests.

Many split tests tend to nudge you toward calling a winner before enough information is collected. Even if the test picks a winner with 90% statistical significance, and you implement the change(s) on your website, of course your audience is going to observe it; especially if they’ve visited previously, because something’s different. That may trickle down into your conversion rate, but only temporarily.

And what if you don’t have enough traffic hitting the mark to consider a test statistically significant? Rather than tell you the honest truth that you need more visitors to really see a measurable result, most services will level the playing field and tell you that you can get by with “just 100” users or some other abysmally low number.

It’s not fair to you, and it’s doing a huge disservice to everyone involved.
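You can sanity-check a traffic claim like that yourself with a textbook sample-size approximation for comparing two conversion rates. The sketch below is a rough estimate under assumptions, not the calculation any specific tool uses. The function name, the 5% significance level, the 80% power and the example baseline and lift are all illustrative choices.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_variant(baseline, lift, alpha=0.05, power=0.8):
    """Approximate visitors needed in each variant for a two-tailed test.

    baseline: current conversion rate, e.g. 0.05 for 5%
    lift:     relative improvement you want to detect, e.g. 0.2 for +20%
    """
    p1 = baseline
    p2 = baseline * (1 + lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical site: 5% baseline conversion, hoping to detect a 20% lift.
n = visitors_per_variant(baseline=0.05, lift=0.2)
print(f"About {n} visitors needed per variant")
```

For a modest lift on a low baseline rate like this one, the estimate runs into the thousands of visitors per variant, far beyond "just 100" users.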

So What Can You Do?

This piece isn’t meant as a giant swipe to conversion optimization platforms. But it is meant to inform you as to what you’re really getting. Thankfully, there are some steps you can take to make your split tests really work for you and get you the kind of results that truly and fully impact your conversion rate – consistently.

So when it comes to split testing, how can you know if your tests are really giving you deeper, more substantial results rather than simply scratching the surface?

You can still use your favorite tool to create a test, but to truly see how well it performed, use the Kissmetrics dashboard to create an A/B test report. Kissmetrics integrates with a wide variety of split testing platforms. Then, simply create a new report and select the experiment and the conversion event.

Let’s assume we want to see which free trial offer brought in more paying subscribers. We’ve let our test run, and now it’s time to load the results:

Right now, there’s not much you can see that’s different than what the split test creation/testing platform gives you. You see common things like how long the test was run, how many visitors there were, and how many conversions between the two. From this example, we can clearly see that the variant beat the original.

Time to switch everything over to the variant, right?

Wait – we’re not quite done yet.

This is where most split test results pages end – but we’re just getting started.

Because we wanted to see how many people we actually got signed up as a result. To check this, we switch the conversion event from “Signed Up” to “Subscription Billed – New Customer”.

Click “Run Report” and we’ll see that surprisingly, our original test beat out the variant for the actual number of subscriptions sold to new customers. So while the variant beat out the original on free trial sign-ups – the original did a better job at converting those sign-ups into paying customers, which is the kind of conversion event we really want in the end.
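The same comparison can be sketched in a few lines if you have raw counts for each conversion event. This is not the Kissmetrics API. The `results` table, the event keys and every number in it are hypothetical, chosen only to mirror the situation described above.

```python
# Made-up counts per variant; "signed_up" and "billed" stand in for the
# "Signed Up" and "Subscription Billed – New Customer" events.
results = {
    "original": {"visitors": 5000, "signed_up": 400, "billed": 120},
    "variant": {"visitors": 5000, "signed_up": 520, "billed": 95},
}


def conversion_rate(variant, event):
    """Share of a variant's visitors who reached the given event."""
    row = results[variant]
    return row[event] / row["visitors"]


def winner(event):
    """Variant with the highest conversion rate for the given event."""
    return max(results, key=lambda v: conversion_rate(v, event))


for event in ("signed_up", "billed"):
    rates = {v: f"{conversion_rate(v, event):.1%}" for v in results}
    print(event, rates, "winner:", winner(event))
```

Picking the winner on the event closest to revenue, rather than on the first event in the funnel, is the whole point of switching the conversion event.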

The A/B Test Report is a great way to know which one of your tests really converted in the end. And you can do so much more with it, including testing for outcomes that are outside your sales funnel – like this one.

See a quick demo of how the A/B Test Report works by watching the short video below.

Segment First, Then Test

Rather than conducting a blanket test with all users, segment them. Visitors versus customers. Mobile customers versus desktop customers. Visitors who subscribed via email versus non-subscribers. The list is endless. This way, you’re making a true apples-to-apples comparison and getting more legitimate results.

Run The Test Again

It might seem counter-intuitive, but a lot of these so-called lifts can be an illusion. Running the test again, or running it for a longer period of time, can help you get a better focus on how – and even if – the results are significant.

In his guide “Most Winning A/B Tests Are Illusory”, Martin Goodson, research lead at Qubit, makes the case for statistical power while eliminating false-positives and other points that can muddy the waters of your split test.

It’s a short, but nevertheless enlightening read, particularly if you don’t come from a statistics background (like me!).
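A quick simulation shows why lifts can be illusory. The sketch below runs many A/A tests, where both "versions" share the same true conversion rate, and compares two habits: checking for significance repeatedly and stopping at the first "win", versus checking once at the end. It is an illustration under assumptions rather than a reproduction of Goodson's analysis. The function names, traffic numbers, peek interval and seed are arbitrary.

```python
import random
from math import sqrt
from statistics import NormalDist


def is_significant(conv_a, conv_b, n, alpha=0.05):
    """Two-tailed two-proportion z-test with n visitors in each arm."""
    pooled = (conv_a + conv_b) / (2 * n)
    if pooled in (0, 1):
        return False  # no variation yet, nothing to test
    se = sqrt(pooled * (1 - pooled) * 2 / n)
    z = (conv_b - conv_a) / n / se
    return 2 * (1 - NormalDist().cdf(abs(z))) < alpha


def false_positive_rates(runs=500, visitors=2000, peek_every=200,
                         rate=0.1, seed=1):
    """Return (rate when peeking, rate when checking only at the end)."""
    rng = random.Random(seed)
    peeking_hits = final_hits = 0
    for _ in range(runs):
        conv_a = conv_b = 0
        called_early = False
        for i in range(1, visitors + 1):
            conv_a += rng.random() < rate  # both arms share the same true rate
            conv_b += rng.random() < rate
            if i % peek_every == 0 and is_significant(conv_a, conv_b, i):
                called_early = True        # a "winner" would have been declared
        peeking_hits += called_early
        final_hits += is_significant(conv_a, conv_b, visitors)
    return peeking_hits / runs, final_hits / runs


peeking, final = false_positive_rates()
print(f"False winners when peeking: {peeking:.1%}, at the end only: {final:.1%}")
```

Because there is no real difference between the two arms, every "winner" here is a false positive. Peeking can only add to that count, which is the argument for fixing your sample size up front and running the test to completion.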

Overcome Assumptions

A lot of A/B testing is grounded in a hypothesis. If we change X, will it have an effect on Y? But in the process, there are lots of assumptions being made. For example, you might assume that your visitors are all coming from the same background – all young moms or avid fishermen or gym-goers. And that might result in huge lifts – for the wrong target audience.

All of these points shouldn’t make you want to give up on split testing entirely, but rather realize what you’re getting and what to really dig into and look deeper at so that you don’t just get big numbers – you get big numbers that translate into definitive, long-term increases across the board.

What are your thoughts on today’s split testing platforms? What about the tests you’ve conducted? Share your thoughts with us in the comments below.

About the Author: Sherice Jacob helps business owners improve website design and increase conversion rates through compelling copywriting, user-friendly design and smart analytics analysis. Learn more at iElectrify.com and download your free web copy tune-up and conversion checklist today!